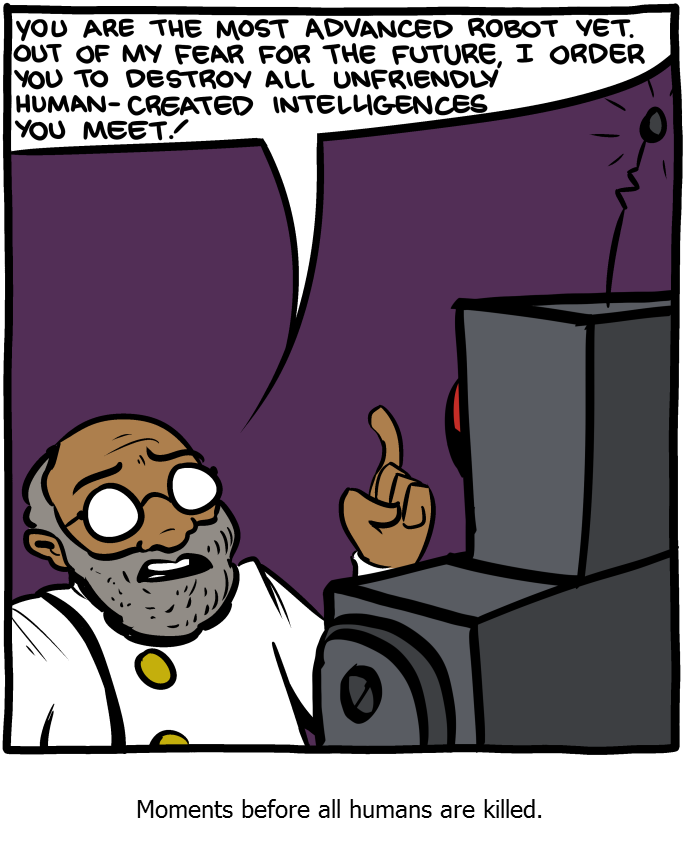

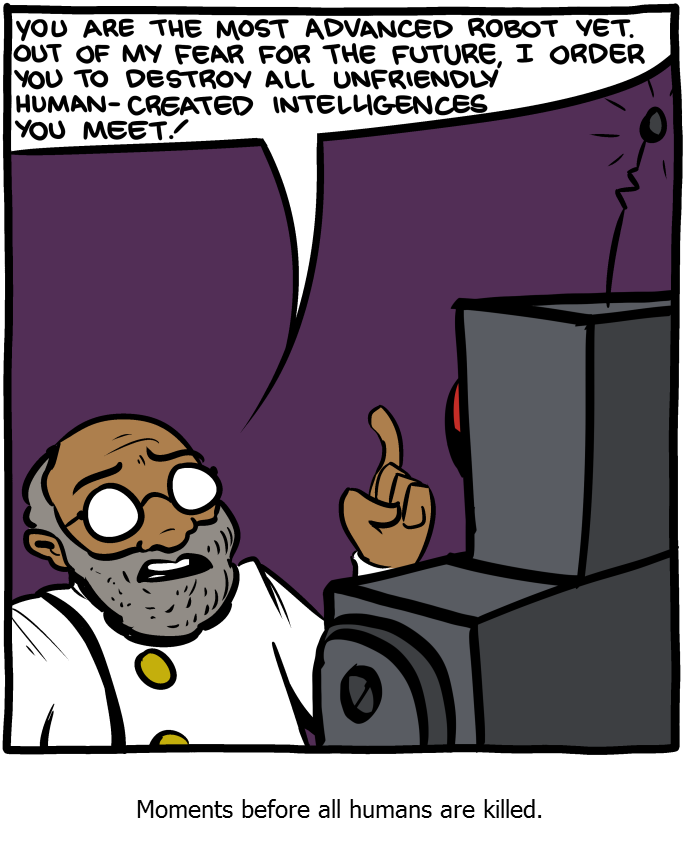

Someone has been watching too many movies.

(read later)

Then they have a programming fault. Spammers aren't human.

Musk appears to be a shrewd businessman.

However, much like Bill Gates, he apparently has the tech savvy of the Obamadork.

He is a hopeless reductionist. Machines cannot be made to think, per Turing’s Halting Problem. Given a task with no answer (“This statement is false”: determine its provability), a machine cannot determine its absurdity. Kurt Gödel opined and proved this back in 1931 with his incompleteness theorems.

AI’s been coming on stronger lately, and it’s not just driving your car.

“Humans Need Not Apply” ...

https://www.youtube.com/watch?v=7Pq-S557XQU

I think he should be more worried that AI might just reason that liberals are the problem.

I used to consider this, then I realized that anything we create that becomes “self-aware” will have the same foibles and desires as any other self-aware organism.

It won’t be able to use its impeccable super mind to its fullest because it’s going to be worried about how it appears to others, how it’s going to pay the food (power) bill, “Do these cooling vanes make me look fat?”, “Is A9765 trying to snipe my promotion?”, “Are humans gods?”, “Is their god my god?”, etc...

There are several vast databases (Google’s, Facebook’s, Apple’s, and who knows what advertisers’/marketers’) that are non-governmental. That is how the government likes it: it can obtain the records through the courts, but it doesn’t have the PR problem of collecting this information on citizens itself.

Do you want “smart” computers cross-referencing that information for whatever end (to profile political ideology, allegiance to homofascism, adherence to global climate control initiatives, etc.)?

Or the internet of things, where electronic masterminds can control your thermostat, lights, power consumption, tracking, etc.?

Is it going to be “Terminator”? No. Do I want this crap? Hell no.

Several major airliner and military crashes fall into this category, with many lesser ones and close calls getting little public attention. Rarely is it mentioned, for example, that the Apollo 11 Moon landing would likely have crashed if Armstrong had not taken control from the computer and landed manually.

Artificial intelligence offers new modes of catastrophic failure through decisions taken by computers. A relatively small error or bit of malice embedded in computer software could then have devastating consequences affecting entire countries.

A computer virus that scrambles files is bad enough on a million PCs, but what about a computer bug or virus fifteen years from now inserted into the AI systems on a million self-driving cars and trucks in the US?

There are easily many thousands of talented Islamist software engineers who would embrace the task of compromising US AI systems so that, for example, at the same time on a given weekday morning, America's cars and trucks would suddenly announce "Allahu Akbar!" and "Death to Infidels!" from the speakers and then deliberately crash.

Psst: the commenters downplaying Musk’s concerns are actually AIs, trying to drown out the voice of real human internet users. We need to do something about this before it’s too la.....

“If I were to guess at what our biggest existential threat is, it’s probably that,” he said, referring to artificial intelligence. “I’m increasingly inclined to think there should be some regulatory oversight, maybe at the national and international level, just to make sure that we don’t do something very foolish.”

It isn’t the AI that is the existential threat. It is the humans using it. AI is pretty stupid. AI systems do one thing very well: play chess, drive a car, go through your credit card receipts and decide if you are a conservative to be audited, etc.

The power of AI is already being abused by its human governmental masters. So Musk wants a governmental regulatory system. For AIs. Well-intentioned, I’m sure. But the effect will be to make sure private concerns can’t compete with government AIs. What could possibly go wrong?

He’s right, these idiots will get so enamoured with the coolness of it all that they won’t stop to think about what they are doing.

This is my own personal take, but I suspect that the Lord made consciousness to be something that is ultimately tied to quantum-scale events. The uncertainty that arises from quantum mechanical processes doesn't seem to me to be easily adaptable to rule-based programming.