A SECOND LOOK AT THE SECOND LAW: CAN ANYTHING HAPPEN IN AN OPEN SYSTEM?

Math Dept., Texas A&M University | Granville Sewell

Posted on 10/19/2006 4:36:37 PM PDT by SirLinksalot

To: SirLinksalot

"In Appendix D of my new book [Sewell, 2005], I take a closer look at the equations for entropy change, which apply not only to thermal entropy but also to the entropy associated with anything else that diffuses, and show that they do not simply say that order cannot increase in a closed system, they also say that in an open system, order cannot increase faster than it is imported through the boundary. According to these equations, the thermal order in an open system can decrease in two different ways -- it can be converted to disorder, or it can be exported through the boundary. It can increase in only one way: by importation through the boundary. Similarly, the increase in "carbon order" in an open system cannot be greater than the carbon order imported through the boundary, and the increase in "chromium order" cannot be greater than the chromium order imported through the boundary, and so on."

Does anyone have the book?

From the table of contents on Amazon, one can see Appendix D here:

http://www.amazon.com/gp/reader/0471735809/ref=sib_dp_top_toc/102-8612528-6088129?ie=UTF8&p=S007#reader-link

To: FreedomProtector

To: FreedomProtector; grey_whiskers

Based on that link, Sewell seems unaware that entropy is a state function. Randomness has little to do with the entropy; no given configuration is random, only processes are random.

44 posted on 10/23/2006 3:27:34 PM PDT by Doctor Stochastic (Vegetable = chaotic is the character of the moderns. - Friedrich Schlegel)

To: Doctor Stochastic; grey_whiskers

Based on that link, Sewell seems unaware that entropy is a state function. Randomness has little to do with the entropy; no given configuration is random, only processes are random.

The thermodynamic state of a system is its condition as described by its physical characteristics. Temperature and internal energy are both state functions, the entropy function is a state function as well. Although normally expressed on a macroscopic scale (Clausius/Kelvin-Planck etc), it is also used in statistical mechanics, the microstate of that system is given by the exact position and velocity of every air molecule in the room.

Entropy can be defined as the logarithm of the number of microstates. The microscopic and macroscopic descriptions can be shown to be related "rigorously by using integrals over appropriately defined quantities instead of simply counting states".

Entropy is a description of the state of the position and velocity of the matter in the system. Configurations can be random or orderly.

From Physics for Scientists and Engineers, Third Edition, Raymond A Serway James 1992 Updated Printing

(undergraduate college level Physics book)

As you look around at the beauties of nature, it is easy to recognize that the events of natural processes have in them a large element of chance. For example, the spacing between trees in a natural forest is quite random. On the other hand, if you were to discover a forest where all the trees were equally spaced, you would probably conclude that the forest was man-made. Likewise, leaves fall to the ground with random arrangements. It would be highly unlikely to find the leaves laid out in perfectly straight rows or in one neat pile. We can express the results of such observations by saying that a disorderly arrangement is much more probable than an orderly one if the laws of nature are allowed to act without interference.

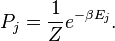

One of the main results of statistical mechanics is that isolated systems tend toward disorder and entropy is a measure of disorder. In light of this new view of entropy, Boltzmann found that an alternative method for calculating entropy, S, is through use of the important relation

S = k ln W

where k is Boltzmann's constant, and W (not to be confused with work) is a number proportional to the probability of the occurrence of a particular event...

....several probability examples follow......

Consider a container of gas consisting of 10^23 molecules. If all of them were found moving in the same direction with the same speed at the same instant, the outcome would be similar to drawing marbles from the bag [half are red and are replaced] 10^23 times and finding a red marble on every draw. This is clearly an unlikely set of events.
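To put rough numbers on the marble comparison, here is a small Python sketch. It is my own illustration, not something from Serway. It assumes a toy system of N two-colour "marbles", takes W to be the number of arrangements with a given count of reds, and uses made-up helper names (`ln_multiplicity`, `boltzmann_entropy`, `log10_prob_all_red`).

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K (SI defined value)


def ln_multiplicity(n_total, n_red):
    """ln W, where W = C(n_total, n_red) counts arrangements with n_red reds.

    lgamma is used instead of factorials so large n_total does not overflow.
    """
    return (math.lgamma(n_total + 1)
            - math.lgamma(n_red + 1)
            - math.lgamma(n_total - n_red + 1))


def boltzmann_entropy(n_total, n_red):
    """S = k ln W for the toy two-colour system (an assumed model)."""
    return K_B * ln_multiplicity(n_total, n_red)


def log10_prob_all_red(n_draws):
    """log10 of the chance of red on every draw, with p = 1/2 per draw."""
    return -n_draws * math.log10(2.0)


if __name__ == "__main__":
    n = 1000
    for reds in (0, 250, 500):
        print(reds, "reds: S =", boltzmann_entropy(n, reds), "J/K")
    # The probability itself underflows a float, so only its log is reported.
    print("log10 P(all red in 1e23 draws) =", log10_prob_all_red(1e23))
```

The all-red arrangement has W = 1 and so S = 0 in this toy model, while the half-and-half split has the largest W. That is the sense in which the textbook calls the orderly outcome improbable.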

Temperature and internal energy are both state functions, and the entropy function is a state function as well. Entropy is a description of the probability of a given configuration or a "logarithm of the number of microstates".

One of the main results of statistical mechanics is that isolated systems tend toward disorder and entropy is a measure of disorder.

The second law gives us many more interesting properties of entropy once one defines isentropic and adiabatic processes, irreversibility, etc...

Sewell adds: "According to these equations, the thermal order in an open system can decrease in two different ways -- it can be converted to disorder, or it can be exported through the boundary. It can increase in only one way: by importation through the boundary."

Sewell's tautology that "if an increase in order is extremely improbable when a system is closed, it is still extremely improbable when a system is open, unless something is entering which makes it not extremely improbable" is simply a more general statement.

Although the application of vector calculus is obvious once boundaries are defined for thermodynamic systems, Sewell's mathematical deduction and the resulting tautology are brilliant and give us another interesting practical property of entropy deduced from the second law, in addition to the ones related to isentropic and adiabatic processes, irreversibility, etc., which we already know.

Thanks for the ping, Doctor Stochastic!

To: FreedomProtector

it is also used in statistical mechanics, the microstate of that system is given by the exact position and velocity of every air molecule in the room. ???

Are we assuming no internal degrees of freedom (monatomic gases)?

Do you happen to remember the term "partition function"?

Cheers!

To: grey_whiskers

Sloppy sentence. My bad.

The intent was not to provide a comprehensive overview of statistical mechanics, merely an introduction to the relation of entropy to statistical mechanics.

Are we assuming no internal degrees of freedom (monatomic gases)?

The author is merely assuming "air" and adds that it is a 'simple' example. While some elements of air are monatomic (e.g. noble gases), the other elements (obviously more) are diatomic elements: hydrogen, nitrogen, oxygen, etc...

"Entropy is a relationship between macroscopic and microscopic quantities. To illustrate what I mean by that statement I'm going to consider a simple example, namely a sealed room full of air. A full, microscopic description of the state of the room at any given time would include the position and velocity of every air molecule in it."

Wikipedia provides the example of a classical ideal diatomic gas.....

Do you happen to remember the term "partition function"?

The partition function is still a statement of probability that a system occupies or does not occupy a particular microstate.
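For anyone who wants to see that probability statement concretely, here is a minimal Python sketch. It assumes a system with a handful of discrete energy levels in contact with a heat bath at temperature T. The function names and the example energies are hypothetical, chosen only for illustration.

```python
import math

K_B = 1.380649e-23  # Boltzmann's constant in J/K


def boltzmann_probabilities(energies, temperature):
    """Return p_i = exp(-E_i / kT) / Z for each microstate energy E_i.

    Energies are shifted by their minimum before exponentiating; this
    rescales Z but leaves the probabilities unchanged.
    """
    beta = 1.0 / (K_B * temperature)
    e_min = min(energies)
    weights = [math.exp(-beta * (e - e_min)) for e in energies]
    z = sum(weights)  # partition function, measured from the shifted zero
    return [w / z for w in weights]


def gibbs_entropy(probabilities):
    """S = -k * sum(p ln p), skipping states with zero probability."""
    return -K_B * sum(p * math.log(p) for p in probabilities if p > 0.0)


if __name__ == "__main__":
    levels = [0.0, 1.0e-21, 2.0e-21]  # joules, made-up values
    probs = boltzmann_probabilities(levels, 300.0)
    print("probabilities:", probs)
    print("entropy:", gibbs_entropy(probs), "J/K")
```

When all W microstates have the same energy, the probabilities are equal and the entropy computed this way reduces to S = k ln W, the relation quoted from the textbook earlier in the thread.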

Thermodynamics can be used to describe the probability of certain arrangements of entities compared to others, and which general direction those arrangements are most likely to move in without interference. While the definition of orderly is vague, it is clear that we observe orderly arrangements for which it is absurd to assume that a natural process organized them. "a forest where all of the trees were equally spaced, you would probably conclude that the forest was man-made" or "leaves laid out in perfectly straight rows or in one neat pile. We can express the results of such observations by saying that a disorderly arrangement is much more probable than an orderly one if the laws of nature are allowed to act without interference." from the 3rd edition of this book (the edition I own).

There is an Organizer outside of nature who interfered when the world was created.

To: grey_whiskers

A mathematical deduction beginning with the statistical mechanics definition of entropy to demonstrate the same statement as Sewell would be interesting.

"According to these equations, the thermal order in an open system can decrease in two different ways -- it can be converted to disorder, or it can be exported through the boundary. It can increase in only one way: by importation through the boundary."

Quod erat demonstrandum would be a satisfying statement at the end; I suspect the deduction would be fairly rigorous.

To: FreedomProtector

should be "some other elements" not "other elements"

To: Oberon

2. It's outside the realm of science to consider the existence of some intelligence that manipulates the mutation of organisms.

As long as you're busy building false assumptions, here's another one. Science does this sort of stuff all the time, including with guided mutation of organisms. So it's clearly not outside the realm of science to do this stuff.

One wonders why science is therefore claimed to be utterly incapable of detecting what science can do. Dogmatic convenience, perhaps?

50 posted on 10/24/2006 8:24:55 AM PDT by r9etb

To: Doctor Stochastic

Randomness has little to do with the entropy; no given configuration is random, only processes are random.

With a name like Dr. Stochastic, you come up with this? C'mon, doc.... Suppose there's a configuration C(t), subjected to a random process. Are you really gonna make the claim that you know C(t+1) with probability=1? Because that's what your comment seems to be saying.

51 posted on 10/24/2006 8:30:40 AM PDT by r9etb

To: r9etb

One wonders why science is therefore claimed to be utterly incapable of detecting what science can do. Dogmatic convenience, perhaps?

No, that wasn't my point at all. I didn't mean to address the possibility or lack thereof of manipulating genes; I meant to point out that the minute you postulate an intelligence manipulating speciation in the wild, you've gone out of science and into philosophy.

Nothing I said was intended as an ideological attack. Please don't take it that way.

52 posted on 10/24/2006 8:35:09 AM PDT by Oberon (What does it take to make government shrink?)

To: FreedomProtector

To: r9etb

No. That's not what I said. I said that the configuration C(t+1) has the same entropy whether or not it is obtained from C(t) by random or non-random processes.

54 posted on 10/24/2006 9:01:53 AM PDT by Doctor Stochastic (Vegetable = chaotic is the character of the moderns. - Friedrich Schlegel)

To: Oberon

I meant to point out that the minute you postulate an intelligence manipulating speciation in the wild, you've gone out of science and into philosophy. Nothing I said was intended as an ideological attack. Please don't take it that way.

I didn't take it that way, actually. The question itself is directed toward the oft-cited claim that it's "philosophy" rather than science -- essentially a claim that identifying design is scientifically impossible. Aside from the obvious -- we do it all the time -- the claim itself appears to be unscientific, and based on the assumption that we can't detect it. It raises the question of whether or not it's cited as a matter of dogmatic convenience.

The problem with the claim is that it ignores the fact that doing the manipulation sits firmly in the realm of science. It makes very little sense to claim that science can do something, but is then incapable of detecting what it did. It might well be difficult to do so, especially long after the fact. But an alleged inability to detect the results of scientific manipulation would be due to a weakness in the detecting science. And an inability to detect long-ago design is scientifically explainable as well.

If we do suppose that the detection of design really does belong in the philosophy dept., would it not then be logically necessary to relegate the identification of "non-design" to the same dept.?

55 posted on 10/24/2006 9:04:16 AM PDT by r9etb

To: r9etb

If we do suppose that the detection of design really does belong in the philosophy dept., would it not then be logically necessary to relegate the identification of "non-design" to the same dept.?

Absolutely! In fact, I've made that very point on an ID thread just a few weeks ago. Naturalism is itself a faith.

56 posted on 10/24/2006 9:12:24 AM PDT by Oberon (What does it take to make government shrink?)

To: Oberon

Absolutely! In fact, I've made that very point on an ID thread just a few weeks ago. Naturalism is itself a faith.

I'd go about halfway with that ... absolute naturalism is a matter of faith, just as absolute creationism is.

But here in the real world, we've got a more complex reality where we know that some things occur naturally; and we know that it's possible for things to be designed for specific purposes -- including, increasingly, biological entities.

I recall once reading something by Einstein, about his thought processes in coming up with Special Relativity. His first step was to investigate the assumptions under which science was currently operating.

It seems to me that the assumption of "random processes only" is open to investigation -- certainly evolutionary biology treats it as a given. But it is up to those who do not consider it a "given" to establish sufficient grounds to revise the assumption.

57 posted on 10/24/2006 9:52:06 AM PDT by r9etb

To: Doctor Stochastic

No. That's not what I said. I said that the configuration C(t+1) has the same entropy whether or not it is obtained from C(t) by random or non-random processes.

If it is a closed system and a random process, it is unlikely to have the same entropy, it will likely have more entropy, and it will never ever have less.

Sewell adds that if it is an open system "According to these equations, the thermal order in an open system can decrease in two different ways -- it can be converted to disorder, or it can be exported through the boundary. It can increase in only one way: by importation through the boundary."

To: FreedomProtector

If it is a closed system and a random process, it is unlikely to have the same entropy, it will likely have more entropy, and it will never ever have less.

No. Entropy is a state function. Random, non-random, deterministic, chaotic, quantum, spooky, etc., all processes lead to the same entropy for the same state. This is what Sewell seems to be missing in his appendix.
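The state-function point can be checked numerically. The sketch below is an illustration under stated assumptions, not anything taken from Sewell's appendix: it assumes one mole of a monatomic ideal gas and made-up endpoint states, and it integrates the reversible heat dQ and dQ/T along three different paths in the (T, V) plane. The path functions and `integrate_path` are hypothetical names.

```python
import math

R = 8.314462618  # molar gas constant in J/(mol K)
T1, V1, T2, V2 = 300.0, 1.0, 600.0, 2.0  # assumed endpoint states


def integrate_path(path, n_mol=1.0, cv=1.5 * R, steps=20000):
    """Midpoint-rule integration of dQ_rev and dQ_rev/T along path(s).

    path maps s in [0, 1] to (T, V). For an ideal gas,
    dQ_rev = n*Cv*dT + (n*R*T/V)*dV. Returns (heat, entropy_change).
    """
    heat = 0.0
    entropy = 0.0
    t_prev, v_prev = path(0.0)
    for i in range(1, steps + 1):
        t_next, v_next = path(i / steps)
        t_mid = 0.5 * (t_prev + t_next)
        v_mid = 0.5 * (v_prev + v_next)
        dq = (n_mol * cv * (t_next - t_prev)
              + n_mol * R * t_mid / v_mid * (v_next - v_prev))
        heat += dq
        entropy += dq / t_mid
        t_prev, v_prev = t_next, v_next
    return heat, entropy


def expand_then_heat(s):
    """Isothermal expansion at T1, then constant-volume heating at V2."""
    if s < 0.5:
        return T1, V1 + (V2 - V1) * 2.0 * s
    return T1 + (T2 - T1) * (2.0 * s - 1.0), V2


def heat_then_expand(s):
    """Constant-volume heating at V1, then isothermal expansion at T2."""
    if s < 0.5:
        return T1 + (T2 - T1) * 2.0 * s, V1
    return T2, V1 + (V2 - V1) * (2.0 * s - 1.0)


def straight_line(s):
    """T and V both change linearly with s."""
    return T1 + (T2 - T1) * s, V1 + (V2 - V1) * s


if __name__ == "__main__":
    closed_form = 1.5 * R * math.log(T2 / T1) + R * math.log(V2 / V1)
    for path in (expand_then_heat, heat_then_expand, straight_line):
        q, ds = integrate_path(path)
        print(path.__name__, "heat:", q, "entropy change:", ds)
    print("closed-form entropy change:", closed_form)
```

The heat absorbed differs from path to path, but the entropy change agrees with the closed-form ideal-gas expression on every path. That is all "state function" means here: the entropy depends on the state reached, not on how it was reached.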

59 posted on 10/24/2006 3:15:14 PM PDT by Doctor Stochastic (Vegetable = chaotic is the character of the moderns. - Friedrich Schlegel)

To: Doctor Stochastic

If it is a closed system and a random process, it is unlikely to have the same entropy, it will likely have more entropy, and it will never ever have less.

No. Entropy is a state function. Random, non-random, deterministic, chaotic, quantum, spooky, etc., all processes lead to the same entropy for the same state. This is what Sewell seems to be missing in his appendix.

I stated the second law of thermodynamics and you said "No"?

Of course entropy is a state function. It is a measure of the thermodynamic state at a particular point in time. If time x occurs before time y, the state at time x will always have entropy less than or equal to that of the state at time y, for a closed system.

"all processes lead to the same entropy for the same state"

The state changes with time. That is like saying the entropy at time x is the same as the entropy at time x. Maybe all of your systems are assumed to already be at equilibrium, but even then the statement is a stretch. Maybe you have invented a way to stop time (travel at the speed of light), but I doubt it.
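As a toy illustration of the state changing with time in a closed system, here is a short Python sketch. It assumes a one-dimensional insulated rod with constant heat capacity per cell, a simple explicit finite-difference heat equation, and thermal entropy taken (up to a constant) as the sum of c ln T over the cells. All names and numbers are made up for the example.

```python
import math


def diffuse_step(temps, r=0.25):
    """One explicit finite-difference step of the 1-D heat equation.

    The rod is insulated (no heat crosses either end), modelled by
    mirroring the end cells. r = alpha*dt/dx**2 should be <= 0.5.
    """
    n = len(temps)
    new = []
    for i in range(n):
        left = temps[i - 1] if i > 0 else temps[i]
        right = temps[i + 1] if i < n - 1 else temps[i]
        new.append(temps[i] + r * (left - 2.0 * temps[i] + right))
    return new


def thermal_entropy(temps, heat_capacity=1.0):
    """Entropy up to an additive constant, from dS = c dT / T per cell."""
    return heat_capacity * sum(math.log(t) for t in temps)


def entropy_history(temps, steps):
    """Entropy after 0, 1, ..., steps diffusion steps."""
    history = [thermal_entropy(temps)]
    for _ in range(steps):
        temps = diffuse_step(temps)
        history.append(thermal_entropy(temps))
    return history


if __name__ == "__main__":
    rod = [400.0] * 10 + [200.0] * 10  # hot half next to cold half, kelvin
    hist = entropy_history(rod, 200)
    print("initial entropy:", hist[0])
    print("final entropy:  ", hist[-1])
    print("never decreased:", all(b >= a for a, b in zip(hist, hist[1:])))
```

With nothing crossing the boundary, the total energy in the rod stays fixed while the entropy computed at each later time is at least as large as at the earlier one. The entropy at any one instant is still just a function of the temperature profile at that instant.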

Disclaimer: Opinions posted on Free Republic are those of the individual posters and do not necessarily represent the opinion of Free Republic or its management. All materials posted herein are protected by copyright law and the exemption for fair use of copyrighted works.

FreeRepublic.com is powered by software copyright 2000-2008 John Robinson